My goal for this project was to retime the frames of a video so that its motion matches an accompanying audio track.

(in the form of copyright-infringing videos)

A ballerina dances to changing music.

A montage of clips - ballet, soldiers, children, and a flock of dogs.

View, download, and run code here.

This paper from Abe Davis aims to accomplish a similar task. My results looked a little better once I implemented the paper's match-candidate detection, which accounts for pitch changes and directional motion. The paper additionally uses tempograms to locally align audio and video rhythms, and has some pretty neat results.

The Wiggles show off their flashy car to the instrumental of REDMERCEDES. The left video is modified, while the right video is original.

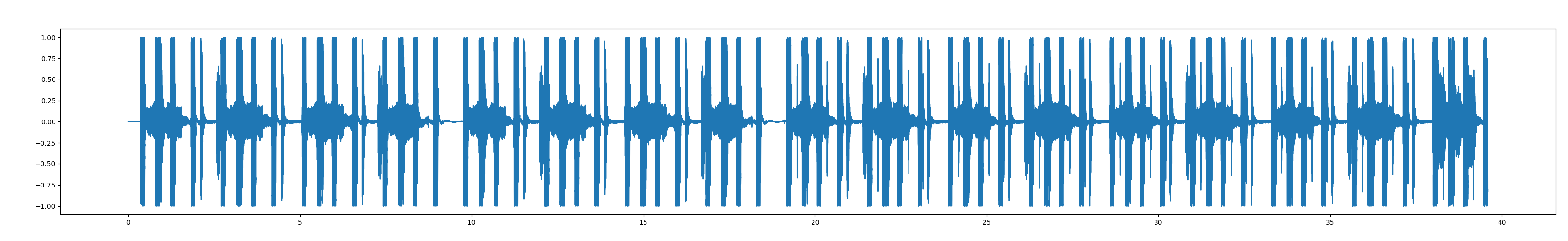

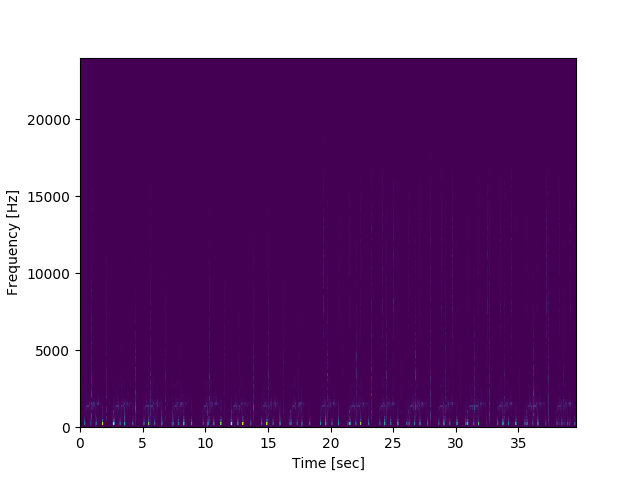

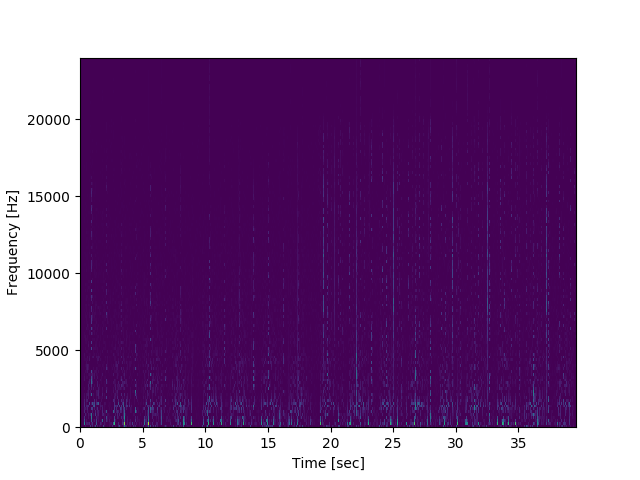

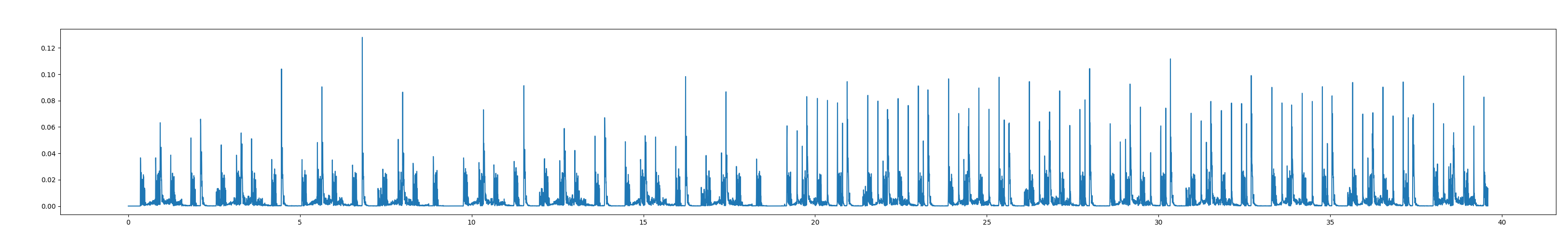

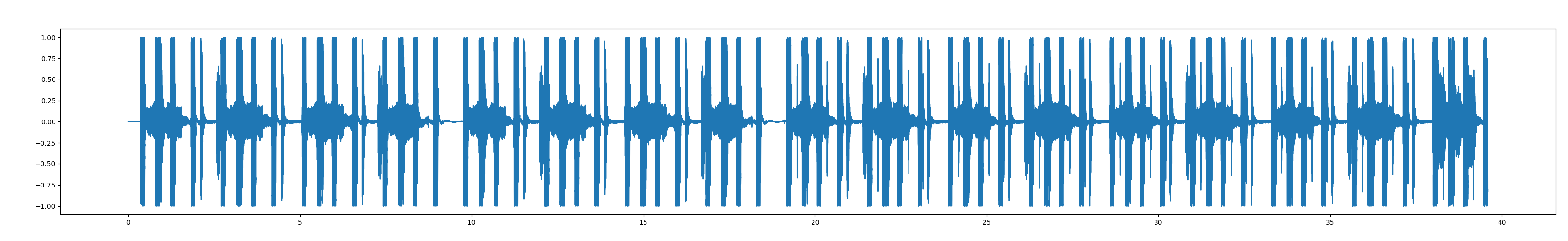

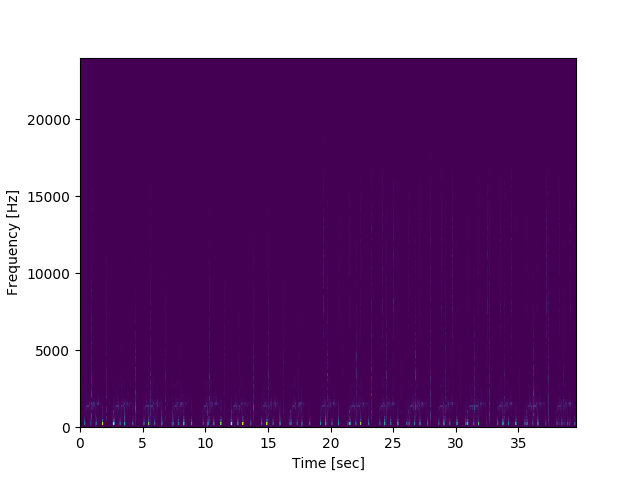

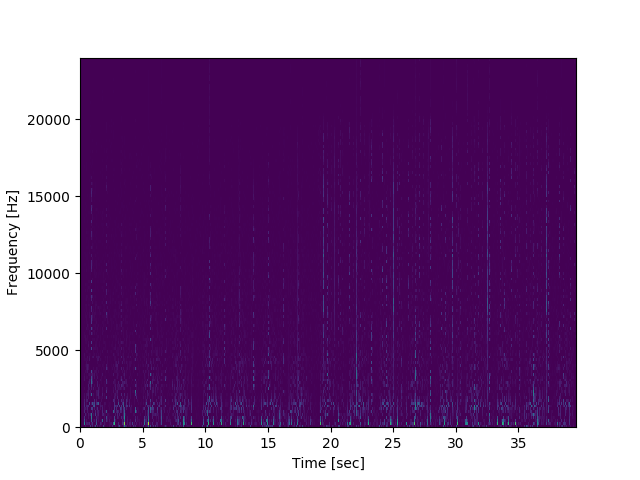

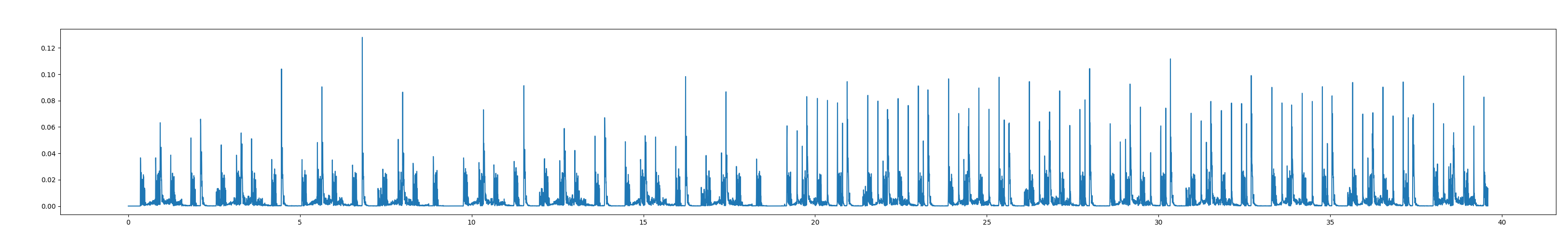

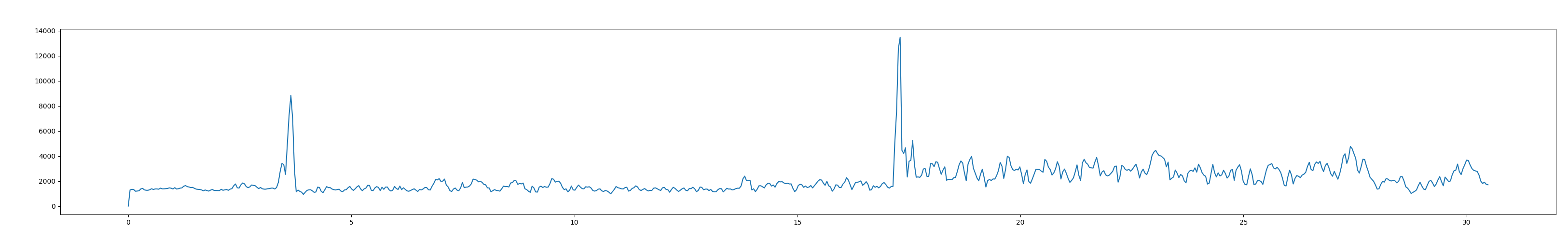

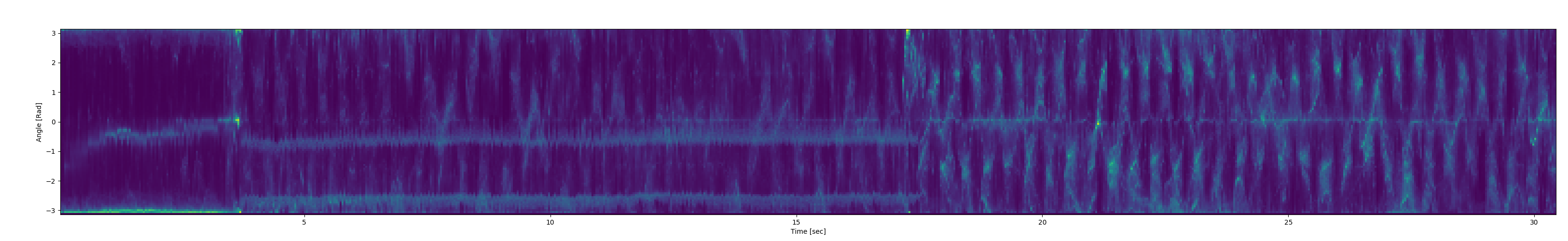

Compute the spectrogram (the complex-valued short-time Fourier transform) of the mono audio signal. The spectral flux between time steps indicates changes in both pitch and volume. Sum the positive flux values across the frequency axis to get a beat-onset envelope with one value per time step.
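A minimal NumPy sketch of that onset envelope, assuming a Hann-windowed STFT with hypothetical `n_fft=1024` and `hop=512` settings and flux computed on STFT magnitudes; the function names are illustrative, not taken from the project's code. It differences the magnitudes between consecutive time steps, keeps only the increases, and sums over frequency.

```python
import numpy as np


def stft_magnitude(signal, n_fft=1024, hop=512):
    """Magnitude of a Hann-windowed STFT, shape (n_frames, n_fft // 2 + 1)."""
    signal = np.asarray(signal, dtype=float)
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack([signal[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))


def onset_envelope(signal, n_fft=1024, hop=512):
    """Spectral-flux onset envelope: one value per pair of adjacent STFT frames."""
    mag = stft_magnitude(signal, n_fft, hop)
    flux = np.diff(mag, axis=0)  # change in each frequency bin between time steps
    return np.maximum(flux, 0.0).sum(axis=1)  # keep increases only, sum over frequency


# Usage: one second of silence followed by a 440 Hz tone starting at 0.5 s.
sr = 8000
t = np.arange(sr) / sr
tone = np.where(t >= 0.5, np.sin(2 * np.pi * 440 * t), 0.0)
env = onset_envelope(tone)
```

If librosa is available, `librosa.onset.onset_strength` computes a comparable spectral-flux envelope (on a mel spectrogram by default).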


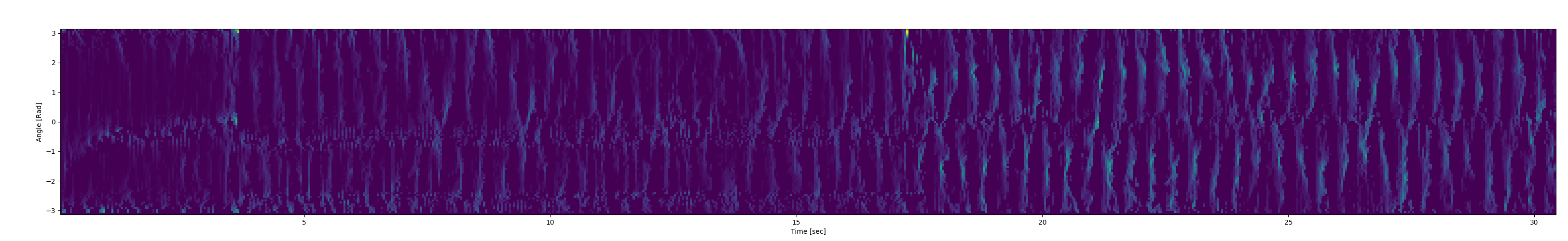

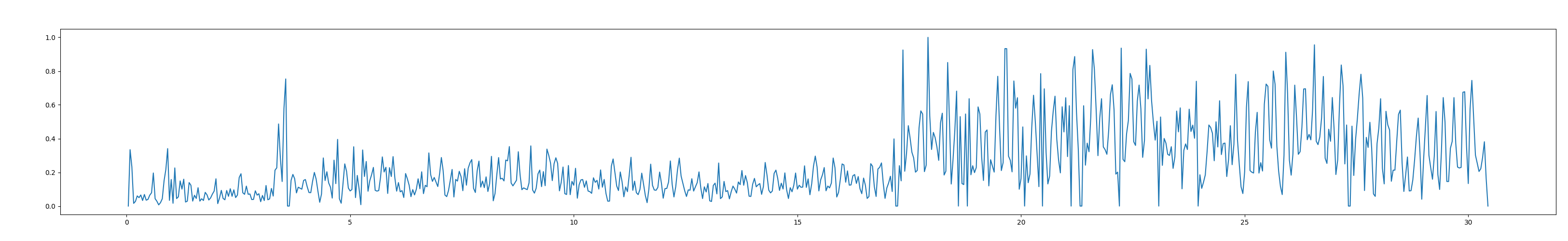

The process for video is analogous: replace frequency with angle, and frequency strength with the magnitude of motion in that direction. The motion for each angle is computed by binning the optical flow vectors of every pixel by direction and summing them. Sum the positive values to compute deceleration, apply a median filter to account for duplicated frames, and remove outliers that may indicate scene transitions to obtain the impact envelope.
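The sketch below assumes per-pixel optical flow has already been computed (for example with OpenCV's `cv2.calcOpticalFlowFarneback`) and stacked into an array of shape `(T, H, W, 2)`. The 32 angle bins, the 3-frame median window, and the mean-plus-three-standard-deviations outlier rule are hypothetical choices. It also makes an interpretive assumption: the median filter runs along time on the per-angle motion, so a single duplicated (motionless) frame is filled in before deceleration is measured, and deceleration is the positive part of each angle's drop in motion between consecutive frames.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def directogram(flows, n_bins=32):
    """Summed flow magnitude per direction bin for each frame, shape (T, n_bins)."""
    flows = np.asarray(flows, dtype=float)
    mag = np.hypot(flows[..., 0], flows[..., 1])
    angle = np.arctan2(flows[..., 1], flows[..., 0])  # radians in [-pi, pi]
    bins = ((angle + np.pi) / (2 * np.pi) * n_bins).astype(int) % n_bins
    out = np.zeros((flows.shape[0], n_bins))
    for t in range(flows.shape[0]):
        # Add up the flow magnitudes of all pixels falling in each angle bin.
        out[t] = np.bincount(bins[t].ravel(), weights=mag[t].ravel(), minlength=n_bins)
    return out


def median_over_time(x, k=3):
    """Median filter of odd width k along axis 0, with edge padding."""
    pad = k // 2
    padded = np.pad(x, ((pad, pad), (0, 0)), mode="edge")
    return np.median(sliding_window_view(padded, k, axis=0), axis=-1)


def impact_envelope(flows, n_bins=32, k=3, z=3.0):
    # Assumption: smooth over time first so one duplicated frame is not a false stop.
    d = median_over_time(directogram(flows, n_bins), k)
    # Deceleration: positive drop in motion per angle, summed over angles.
    decel = np.maximum(d[:-1] - d[1:], 0.0).sum(axis=1)
    # Hypothetical outlier rule: zero out spikes far above the rest (likely cuts).
    threshold = decel.mean() + z * decel.std()
    return np.where(decel > threshold, 0.0, decel)


# Usage: rightward motion with a duplicated frame at t=2, then a real stop at t=5.
flows = np.zeros((6, 2, 2, 2))
flows[[0, 1, 3, 4], ..., 0] = 1.0
env = impact_envelope(flows)
```

In this toy input the duplicated frame produces no impact, while the stop after frame 4 does.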


Match points are computed for both media types as simple local maxima, with the minimum distance between peaks set proportional to the sample rate. I found that peak detection on the impact envelopes gave results similar to running peak detection on the optical flow magnitude and taking the falling edge.
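Peak picking can be sketched as local-maximum detection followed by greedy suppression of weaker peaks that fall within the minimum distance. Here `sample_rate` is the envelope's own rate (envelope values per second), and `min_gap_seconds` is a hypothetical stand-in for the constant factor mentioned above.

```python
import numpy as np


def pick_peaks(envelope, sample_rate, min_gap_seconds=0.25):
    """Indices of local maxima at least min_gap_seconds * sample_rate samples apart."""
    x = np.asarray(envelope, dtype=float)
    min_dist = max(1, int(round(min_gap_seconds * sample_rate)))
    # Candidates: strictly above the left neighbour and at least the right one.
    idx = np.flatnonzero((x[1:-1] > x[:-2]) & (x[1:-1] >= x[2:])) + 1
    kept = []
    # Visit candidates from tallest to shortest; drop any too close to a kept peak.
    for i in idx[np.argsort(-x[idx], kind="stable")]:
        if all(abs(int(i) - j) >= min_dist for j in kept):
            kept.append(int(i))
    return sorted(kept)


env = [0, 1, 0, 3, 0, 0, 2, 0, 0, 0, 5, 0]
peaks = pick_peaks(env, sample_rate=10, min_gap_seconds=0.3)
```

`scipy.signal.find_peaks(x, distance=...)` offers the same minimum-distance constraint off the shelf.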


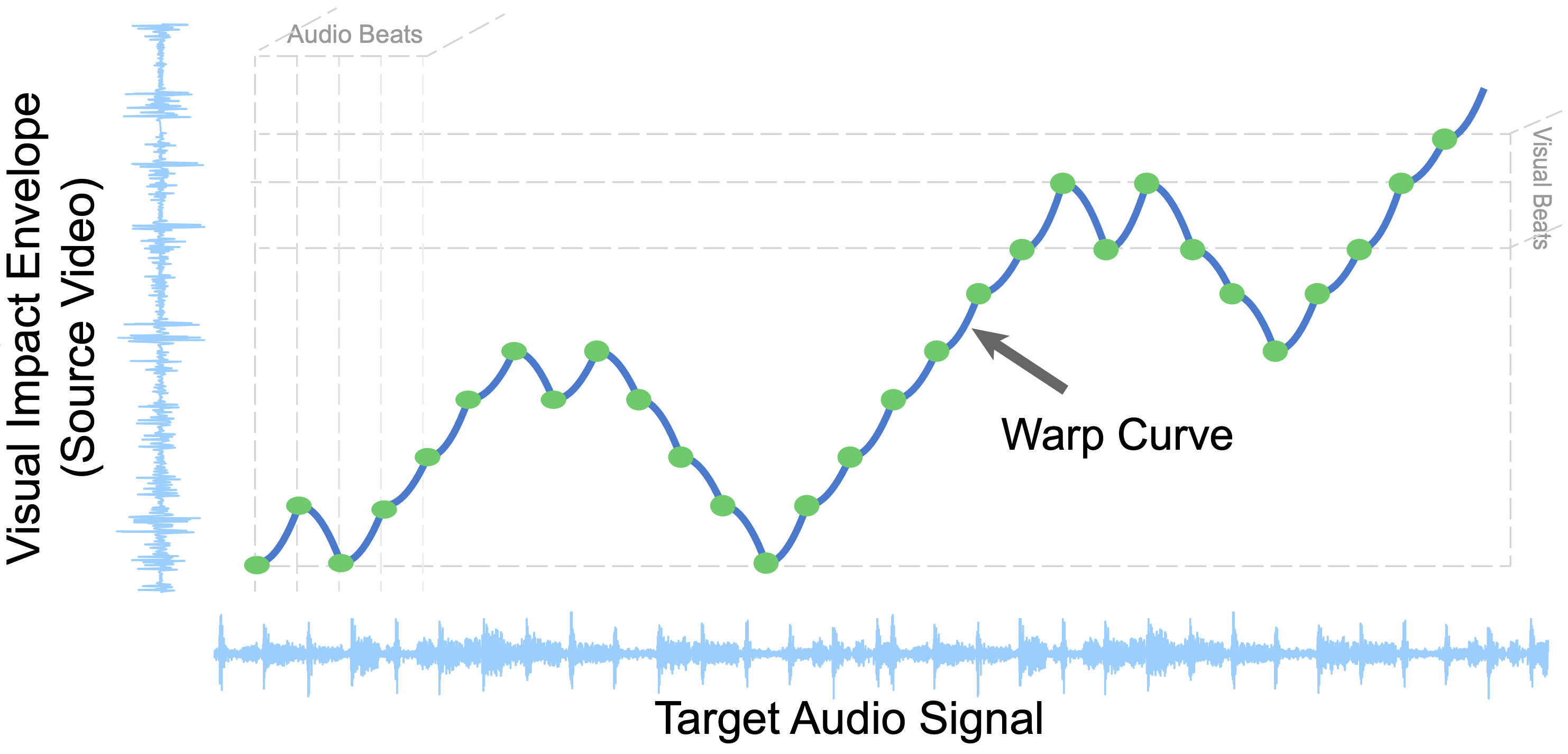

Audio and video match points were paired iteratively, constrained by bounds on how much the video speed could change. The audio was resized as necessary. Once the match points were found, each section of video was reinterpolated and aligned with the audio. My frames were spaced equally in time within each section, whereas Abe Davis spaces frames using a third-degree polynomial fitted to the match times.
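One way to sketch the matching and retiming steps, under stated assumptions: match points are times in seconds, each audio beat is greedily paired with a later video impact whose implied playback speed is closest to 1, and the bounds `min_speed=0.5` / `max_speed=2.0` are hypothetical. Retiming uses piecewise-linear interpolation between matched pairs, which yields evenly spaced frames within each section; it does not reproduce the cubic-polynomial spacing from Abe Davis's paper.

```python
import numpy as np


def match_points(audio_times, video_times, min_speed=0.5, max_speed=2.0):
    """Greedily pair audio beats with video impacts (times in seconds).

    Starting from an anchor at (0, 0), a beat is paired with a later impact only
    if the section's speed (video duration / audio duration) is within bounds.
    Among valid impacts, the one whose speed is closest to 1 is chosen.
    """
    video = np.sort(np.asarray(video_times, dtype=float))
    pairs = [(0.0, 0.0)]
    for a in np.sort(np.asarray(audio_times, dtype=float)):
        a0, v0 = pairs[-1]
        if a <= a0:
            continue
        cands = video[video > v0]
        speeds = (cands - v0) / (a - a0)
        valid = (speeds >= min_speed) & (speeds <= max_speed)
        if valid.any():
            best = np.argmin(np.abs(np.log(speeds[valid])))
            pairs.append((float(a), float(cands[valid][best])))
    return pairs


def retime(pairs, out_fps, duration):
    """Video time to sample at each output frame, linear within each section.

    np.interp holds the last matched video time past the final pair.
    """
    audio_knots = np.array([a for a, _ in pairs])
    video_knots = np.array([v for _, v in pairs])
    out_times = np.arange(0.0, duration, 1.0 / out_fps)
    return np.interp(out_times, audio_knots, video_knots)


pairs = match_points([1.0, 2.0, 3.0], [1.2, 1.9, 5.0])
video_t = retime(pairs, out_fps=2, duration=2.0)
```

Here the beat at 3.0 s goes unmatched because reaching the impact at 5.0 s would require a speed of 3.1, outside the bounds. Multiplying `video_t` by the source frame rate and rounding picks the nearest source frame for each output frame.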

I have reached a result I am happy with, but I will continue to experiment with tweaks, and mostly try to copy stuff that Abe does.